Photogrammetry, NeRF and Gaussian splatting all reconstruct a 3D scene digitally from captured data, most commonly a series of photographs taken from different angles. Photogrammetry and Gaussian splatting turn those photos into a 3D point cloud, while NeRF uses them to train a neural network. What differentiates the three technologies are their processes, outcomes and uses. From the level of realism achieved, to whether the technology can generate novel views, to its speed and memory usage, this article aims to give a foundational understanding of the unique features of each and the circumstances in which you would use them.

Photogrammetry

In photogrammetry, a series of angled, overlapping photographs is used to map out a 3D point cloud (a group of 3D co-ordinates), which is then used to create a mesh. Because photogrammetry relies on triangulation, locating each point where the lines of sight from two or more cameras meet, only two angled photographs are needed at the most basic level, but the more angles you provide to photogrammetry software, the more accurate the point cloud will be. The main advantages of this technique are its accuracy, its light computational load and the widespread compatibility of 3D meshes in industries such as animation, gaming and AR. However, photogrammetry requires your photographs to contain static, identifiable points, which means featureless areas such as skies, bodies of water and transparent surfaces are often not reconstructed at all, leaving gaps in the computed mesh. Luckily, the two newer technologies below largely resolve this problem.
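To make the triangulation step concrete, here is a minimal Python sketch. It assumes each camera's position and the direction of its line of sight towards the same matched feature are already known (in practice these come from camera calibration and pose estimation, which the sketch skips). It uses the simple midpoint method: find the closest points on the two rays and average them, since real-world rays rarely intersect exactly. The function names are hypothetical, and production photogrammetry software uses more robust multi-view methods.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec3, k: float) -> Vec3:
    return (a[0] * k, a[1] * k, a[2] * k)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(o1: Vec3, d1: Vec3, o2: Vec3, d2: Vec3) -> Vec3:
    """Estimate a 3D point seen by two cameras (hypothetical helper).

    o1, o2: camera centres; d1, d2: ray directions towards the same feature.
    Returns the midpoint of the shortest segment joining the two rays.
    """
    w0 = sub(o1, o2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays carry no depth information, so the point cannot be located.
        raise ValueError("rays are parallel; cannot triangulate")
    s = (b * e - c * d) / denom  # position along ray 1 of its closest point
    t = (a * e - b * d) / denom  # position along ray 2 of its closest point
    p1 = add(o1, scale(d1, s))
    p2 = add(o2, scale(d2, t))
    return scale(add(p1, p2), 0.5)


# Two cameras 2 units apart, both sighting the same feature.
point = triangulate_midpoint((0.0, 0.0, 0.0), (1.0, 2.0, 5.0),
                             (2.0, 0.0, 0.0), (-1.0, 2.0, 5.0))
print(point)  # prints (1.0, 2.0, 5.0)
```

With only two views, any error in the ray directions moves the estimate directly; adding more cameras lets software average out those errors, which is why more angles give a more accurate point cloud.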

Image Credit: Capturing Reality

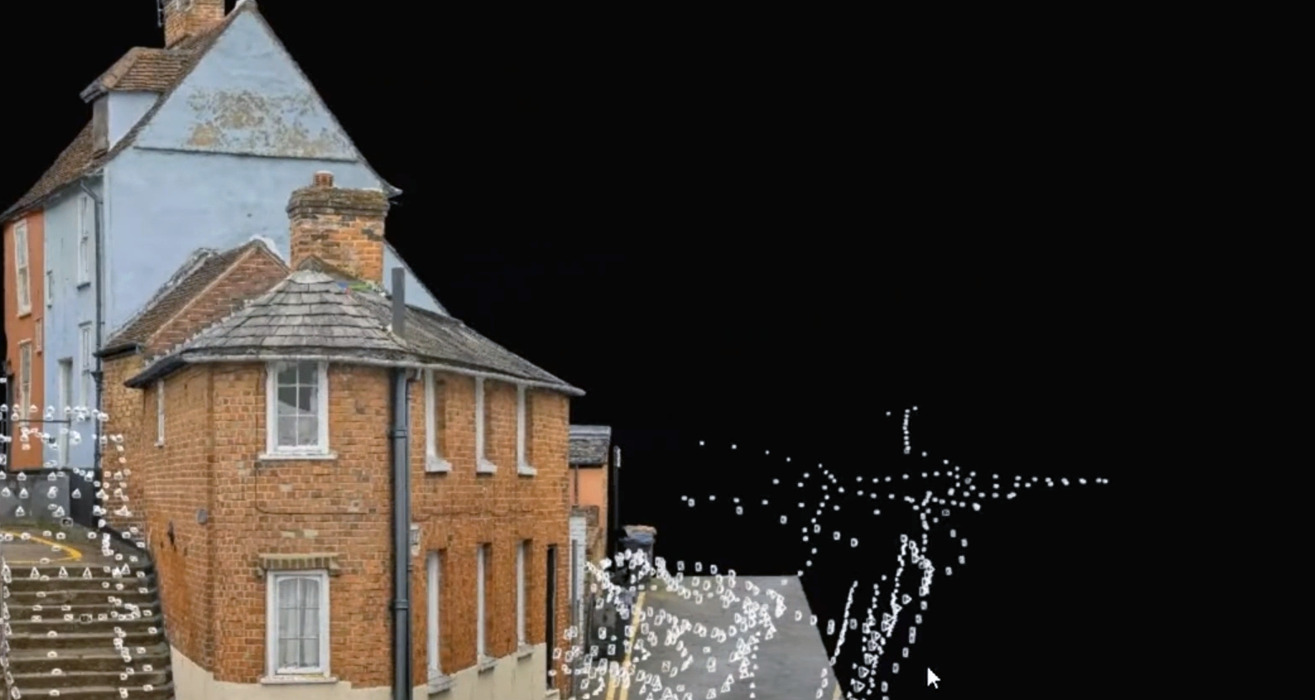

Gaussian Splatting

In Gaussian splatting, the 3D point cloud generated from a series of angled photos is not used to create a mesh. Instead, each point becomes the starting point for a 3D Gaussian, and these Gaussians are refined against the original photos and then rendered directly to produce the final image. While points only contain 3D co-ordinates and colour data, Gaussians include two more sets of data: opacity (transparency) and covariance, which describes each Gaussian's 3D shape as an ellipsoid. By blending millions of Gaussians together, we get a smoother, more photorealistic representation of a 3D space that can be rendered in real time. However, this representation is extremely taxing on memory, and since most digital industries currently work with meshes, the uses for Gaussian splatting are very limited, for now. Because it can render featureless areas and extremely fine details such as hair, we could see Gaussian splatting being used for digital showrooms, VR worlds and just about anything where hyperrealism is desired.
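The blending step can be sketched as front-to-back alpha compositing at a single pixel. This minimal Python sketch assumes the Gaussians have already been projected onto the screen as 2D ellipses (the 3D-to-2D projection is skipped), and the `Splat` class and its fields are hypothetical. Each splat's contribution fades with distance from its centre according to its covariance, is scaled by its opacity, and is reduced by whatever the nearer splats have already covered.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[float, float, float]


@dataclass
class Splat:
    """Hypothetical Gaussian that has already been projected onto the screen."""
    mean: Tuple[float, float]        # centre in pixel coordinates
    cov: Tuple[float, float, float]  # 2x2 covariance as (sxx, sxy, syy)
    opacity: float                   # 0 = invisible, 1 = fully opaque at the centre
    color: RGB                       # RGB in the range 0..1
    depth: float                     # distance from the camera


def falloff(s: Splat, px: float, py: float) -> float:
    """Gaussian weight of splat s at pixel (px, py): 1 at the centre, fading outwards."""
    sxx, sxy, syy = s.cov
    det = sxx * syy - sxy * sxy
    # Inverse of the symmetric 2x2 covariance matrix.
    ixx, ixy, iyy = syy / det, -sxy / det, sxx / det
    dx, dy = px - s.mean[0], py - s.mean[1]
    # Squared Mahalanobis distance: how far the pixel is, measured in the ellipse's own shape.
    m = dx * dx * ixx + 2.0 * dx * dy * ixy + dy * dy * iyy
    return math.exp(-0.5 * m)


def shade_pixel(splats: List[Splat], px: float, py: float,
                background: RGB = (0.0, 0.0, 0.0)) -> RGB:
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for s in sorted(splats, key=lambda sp: sp.depth):  # nearest first
        alpha = s.opacity * falloff(s, px, py)
        for i in range(3):
            color[i] += transmittance * alpha * s.color[i]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively covered; stop early
            break
    # Whatever light gets through all splats comes from the background.
    return (color[0] + transmittance * background[0],
            color[1] + transmittance * background[1],
            color[2] + transmittance * background[2])


red = Splat((0.0, 0.0), (1.0, 0.0, 1.0), 0.5, (1.0, 0.0, 0.0), depth=1.0)
blue = Splat((0.0, 0.0), (1.0, 0.0, 1.0), 1.0, (0.0, 0.0, 1.0), depth=2.0)
print(shade_pixel([blue, red], 0.0, 0.0))  # prints (0.5, 0.0, 0.5)
```

Sorting by depth means the input order does not matter: the half-transparent red splat in front lets half the light through to the opaque blue one behind it. Repeating this for every pixel, over millions of splats, is what makes the representation both photorealistic and memory-hungry.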

See Photogrammetry, NeRF and Gaussian Splatting in action!

NeRF (Neural Radiance Fields)

In NeRF, the differently angled photographs you provide serve as training data for a neural network, which learns to predict the radiance field of the scene: the density (how opaque it is) and colour of any given 3D point, as seen from any viewing direction. Because the network predicts these values everywhere rather than only at matched points, NeRF can generate novel views, that is, images from viewpoints none of your photos were taken from, and it can plausibly fill in some of the gaps between your photos for you. What's more, this technology doesn't need to work with identifiable points the way photogrammetry does, so transparent, shiny and featureless areas can all be recreated with great accuracy. However, with meshes being the most widely integrated type of 3D representation, NeRF currently suffers from the same limitations as Gaussian splatting, along with the extra burden of slow rendering times. Even so, companies such as Google have already integrated the technology into the Immersive View feature of Google Maps, and as processing times and compatibility continue to improve, NeRF could completely revolutionize 3D modelling as we know it.
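How a trained NeRF turns its predictions into an image can be sketched with volume rendering along a single camera ray: sample points along the ray, query density and colour at each one, and accumulate colour weighted by how much light still reaches that sample. In this minimal Python sketch, `toy_field` is a hypothetical stand-in for the trained network (a solid orange sphere), and simple uniform sampling replaces the hierarchical sampling used in the original NeRF.

```python
import math
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
# A radiance field maps (point, viewing direction) -> (density, RGB colour).
Field = Callable[[Vec3, Vec3], Tuple[float, Vec3]]


def toy_field(point: Vec3, direction: Vec3) -> Tuple[float, Vec3]:
    """Hypothetical stand-in for a trained NeRF: a solid orange sphere of radius 1.

    A real network would also use `direction` to model view-dependent effects
    such as reflections; this toy ignores it.
    """
    x, y, z = point
    density = 10.0 if x * x + y * y + z * z <= 1.0 else 0.0
    return density, (1.0, 0.5, 0.0)


def render_ray(field: Field, origin: Vec3, direction: Vec3,
               near: float, far: float, n_samples: int = 64) -> Tuple[Vec3, float]:
    """Volume-render one ray; returns (pixel colour, accumulated opacity).

    Assumes `direction` has unit length, so `step` is a distance in scene units.
    """
    step = (far - near) / n_samples
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that survives to the current sample
    for i in range(n_samples):
        t = near + (i + 0.5) * step  # midpoint of the i-th segment
        point = (origin[0] + t * direction[0],
                 origin[1] + t * direction[1],
                 origin[2] + t * direction[2])
        sigma, rgb = field(point, direction)
        # Probability that the ray is absorbed within this segment.
        alpha = 1.0 - math.exp(-sigma * step)
        for k in range(3):
            color[k] += transmittance * alpha * rgb[k]
        transmittance *= 1.0 - alpha
    return (color[0], color[1], color[2]), 1.0 - transmittance


# A ray straight through the sphere, and one that passes beside it.
hit_color, hit_opacity = render_ray(toy_field, (0.0, 0.0, -3.0), (0.0, 0.0, 1.0), 0.0, 6.0)
miss_color, miss_opacity = render_ray(toy_field, (0.0, 2.0, -3.0), (0.0, 0.0, 1.0), 0.0, 6.0)
print(hit_color, hit_opacity)
print(miss_color, miss_opacity)
```

The accumulation is the same front-to-back compositing idea Gaussian splatting uses when blending its Gaussians. The difference in speed comes from where the values come from: here every one of the dozens of samples per pixel would require a neural network evaluation, which is why NeRF rendering is slow.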

Image Credit: Olli Huttunen

If you would like to learn more about these exciting technologies, feel free to contact us at hello@synima.com.